fairlearn.preprocessing.CorrelationRemover

- class fairlearn.preprocessing.CorrelationRemover(*, sensitive_feature_ids=None, alpha=1)

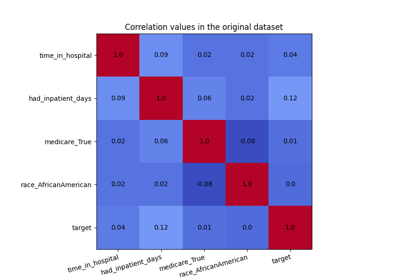

A component that filters out sensitive correlations in a dataset.

CorrelationRemover applies a linear transformation to the non-sensitive feature columns in order to remove their correlation with the sensitive feature columns while retaining as much information as possible (as measured by the least-squares error).

Read more in the User Guide.

- Parameters:

- sensitive_feature_ids : list

List of columns to filter out. This can be a sequence of either ints (for NumPy arrays) or strings (for pandas DataFrames).

- alpha : float, default=1

Parameter that controls how much to filter. With alpha=1.0 all correlation with the sensitive features is filtered out, while with alpha=0.0 no filtering is applied.

Notes

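This method changes the original dataset by removing the correlation between the non-sensitive and the sensitive values. To describe this mathematically, assume that the original dataset \(X\) contains a set of sensitive attributes \(S\) and a set of non-sensitive attributes \(Z\). Writing \(\mathbf{x}_i\) for the non-sensitive features of the \(i\)-th sample, \(\mathbf{s}_i\) for its sensitive features, \(\overline{\mathbf{s}}\) for the mean of the sensitive features, and \(\mathbf{z}_i\) for the transformed non-sensitive features, the method solves the following problem: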

\[\begin{split}\min_{\mathbf{z}_{1}, \ldots, \mathbf{z}_{n}} \sum_{i=1}^{n}\left\|\mathbf{z}_{i}-\mathbf{x}_{i}\right\|^{2} \\ \text{subject to} \\ \frac{1}{n} \sum_{i=1}^{n} \mathbf{z}_{i}\left(\mathbf{s}_{i}-\overline{\mathbf{s}}\right)^{T}=\mathbf{0}\end{split}\]

The solution to this problem is found by centering the sensitive features, fitting a least-squares linear regression of the non-sensitive features on the centered sensitive features, and reporting the residuals.
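The closed-form solution can be sketched in NumPy as follows. This illustrates the math in these Notes and is not fairlearn's source code; the helper name `remove_correlation` and the synthetic data are hypothetical. The sketch centers the sensitive columns, regresses the non-sensitive columns on them with `np.linalg.lstsq`, and keeps the residuals. By the normal equations of least squares, the residuals are orthogonal to the centered sensitive columns, which is exactly the constraint.

```python
import numpy as np

def remove_correlation(X_nonsens, S):
    """Hypothetical helper: return Z minimizing sum_i ||z_i - x_i||^2
    subject to (1/n) * sum_i z_i (s_i - s_bar)^T = 0."""
    S_centered = S - S.mean(axis=0)  # s_i - s_bar, computed column-wise
    # Least-squares regression of the non-sensitive columns on the centered sensitive columns.
    beta, *_ = np.linalg.lstsq(S_centered, X_nonsens, rcond=None)
    return X_nonsens - S_centered @ beta  # residuals

rng = np.random.default_rng(42)
n = 500
S = rng.normal(size=(n, 2))  # two synthetic sensitive features
# Three synthetic non-sensitive features that depend linearly on S plus noise.
X_nonsens = S @ np.array([[1.0, -2.0, 0.0],
                          [0.5, 0.0, 3.0]]) + rng.normal(size=(n, 3))

Z = remove_correlation(X_nonsens, S)

# Constraint check: (1/n) * sum_i z_i (s_i - s_bar)^T is a 3x2 matrix of near-zeros.
constraint = Z.T @ (S - S.mean(axis=0)) / n
print(np.abs(constraint).max())
```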

The columns in \(S\) are dropped from the output, and the hyperparameter \(\alpha\) lets you tweak the amount of filtering that gets applied:

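\[X_{\text{tfm}} = \alpha X_{\text{filtered}} + (1-\alpha) X_{\text{orig}}\]

Here \(X_{\text{filtered}}\) denotes the residuals described above and \(X_{\text{orig}}\) the original non-sensitive columns.

Note that the lack of correlation does not imply statistical independence: the non-sensitive features may still depend nonlinearly on the sensitive features. Therefore, we expect this method to be most appropriate as a preprocessing step for (generalized) linear models.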
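Two consequences of the blending formula and the note on dependence can be checked numerically. Because the filtered columns have zero sample covariance with the sensitive columns, the blend leaves exactly a fraction \(1-\alpha\) of the original covariance. And a feature that depends on the sensitive feature only nonlinearly may already be uncorrelated with it, in which case the linear filter leaves it unchanged. The NumPy sketch below re-implements the math for illustration only; the helpers `filter_and_blend` and `cov_with` and all data are hypothetical.

```python
import numpy as np

def filter_and_blend(X_nonsens, S, alpha):
    """Hypothetical helper: X_tfm = alpha * X_filtered + (1 - alpha) * X_orig."""
    S_centered = S - S.mean(axis=0)
    beta, *_ = np.linalg.lstsq(S_centered, X_nonsens, rcond=None)
    X_filtered = X_nonsens - S_centered @ beta  # least-squares residuals
    return alpha * X_filtered + (1 - alpha) * X_nonsens

def cov_with(x, s):
    """Sample covariance (1/n normalization) between two 1-D arrays."""
    return np.mean((x - x.mean()) * (s - s.mean()))

rng = np.random.default_rng(0)
s = rng.normal(size=1000)
x = 2.0 * s + rng.normal(size=1000)  # linearly correlated with s
S, X = s[:, None], x[:, None]

original_cov = cov_with(x, s)
for alpha in (0.0, 0.5, 1.0):
    x_tfm = filter_and_blend(X, S, alpha)[:, 0]
    # The remaining covariance is (1 - alpha) times the original covariance.
    print(alpha, cov_with(x_tfm, s) / original_cov)

# Nonlinear dependence survives: with a symmetric s, x = s**2 is uncorrelated with s,
# so the filter leaves it (numerically) unchanged even though x is a function of s.
s_sym = np.array([-2.0, -1.0, 0.0, 1.0, 2.0] * 200)
x_sq = s_sym ** 2
x_sq_tfm = filter_and_blend(x_sq[:, None], s_sym[:, None], 1.0)[:, 0]
print(np.allclose(x_sq_tfm, x_sq))  # True
```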

New in version 0.6.

- fit(X, y=None)

Learn the projection required to make the dataset uncorrelated with sensitive columns.

- fit_transform(X, y=None, **fit_params)

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

- Parameters:

- X : array-like of shape (n_samples, n_features)

Input samples.

- y : array-like of shape (n_samples,) or (n_samples, n_outputs), default=None

Target values (None for unsupervised transformations).

- **fit_params : dict

Additional fit parameters.

- Returns:

- X_new : ndarray of shape (n_samples, n_features_new)

Transformed array.

- get_metadata_routing()

Get metadata routing of this object.

Please check the User Guide on how the routing mechanism works.

- Returns:

- routing : MetadataRequest

A MetadataRequest encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params : dict

Parameter names mapped to their values.

- set_output(*, transform=None)

Set output container.

See Introducing the set_output API for an example on how to use the API.

- Parameters:

- transform : {“default”, “pandas”, “polars”}, default=None

Configure output of transform and fit_transform.

“default”: Default output format of a transformer

“pandas”: DataFrame output

“polars”: Polars output

None: Transform configuration is unchanged

New in version 1.4: “polars” option was added.

- Returns:

- self : estimator instance

Estimator instance.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params : dict

Estimator parameters.

- Returns:

- self : estimator instance

Estimator instance.