Get Started#

Installation#

Fairlearn can be installed with pip from

PyPI as follows:

pip install fairlearn

Fairlearn is also available on conda-forge:

conda install -c conda-forge fairlearn

For further information on how to install Fairlearn and its optional dependencies, please check out the Installation Guide.

If you are updating from a previous version of Fairlearn, please see Version guide.

Note

The Fairlearn API is still evolving, so example code in

this documentation may not work with every version of Fairlearn.

Please use the version selector to get to the instructions for

the appropriate version. The instructions for the main

branch require Fairlearn to be installed from a clone of the

repository.

Overview of Fairlearn#

The Fairlearn package has two components:

Metrics for assessing which groups are negatively impacted by a model, and for comparing multiple models in terms of various fairness and accuracy metrics.

Algorithms for mitigating unfairness in a variety of AI tasks and along a variety of fairness definitions.

Fairlearn in 10 minutes#

The Fairlearn toolkit can assist in assessing and mitigation unfairness in Machine Learning models. It’s impossible to provide a sufficient overview of fairness in ML in this Quickstart tutorial, so we highly recommend starting with our User Guide. Fairness is a fundamentally sociotechnical challenge and cannot be solved with technical tools alone. They may be helpful for certain tasks such as assessing unfairness through various metrics, or to mitigate observed unfairness when training a model. Additionally, fairness has different definitions in different contexts and it may not be possible to represent it quantitatively at all.

Given these considerations this Quickstart tutorial merely provides short code snippet examples of how to use basic Fairlearn functionality for those who are already intimately familiar with fairness in ML. The example below is about binary classification, but we similarly support regression.

Prerequisites#

In order to run the code samples in the Quickstart tutorial, you need to install the following dependencies:

pip install fairlearn matplotlib

Loading the dataset#

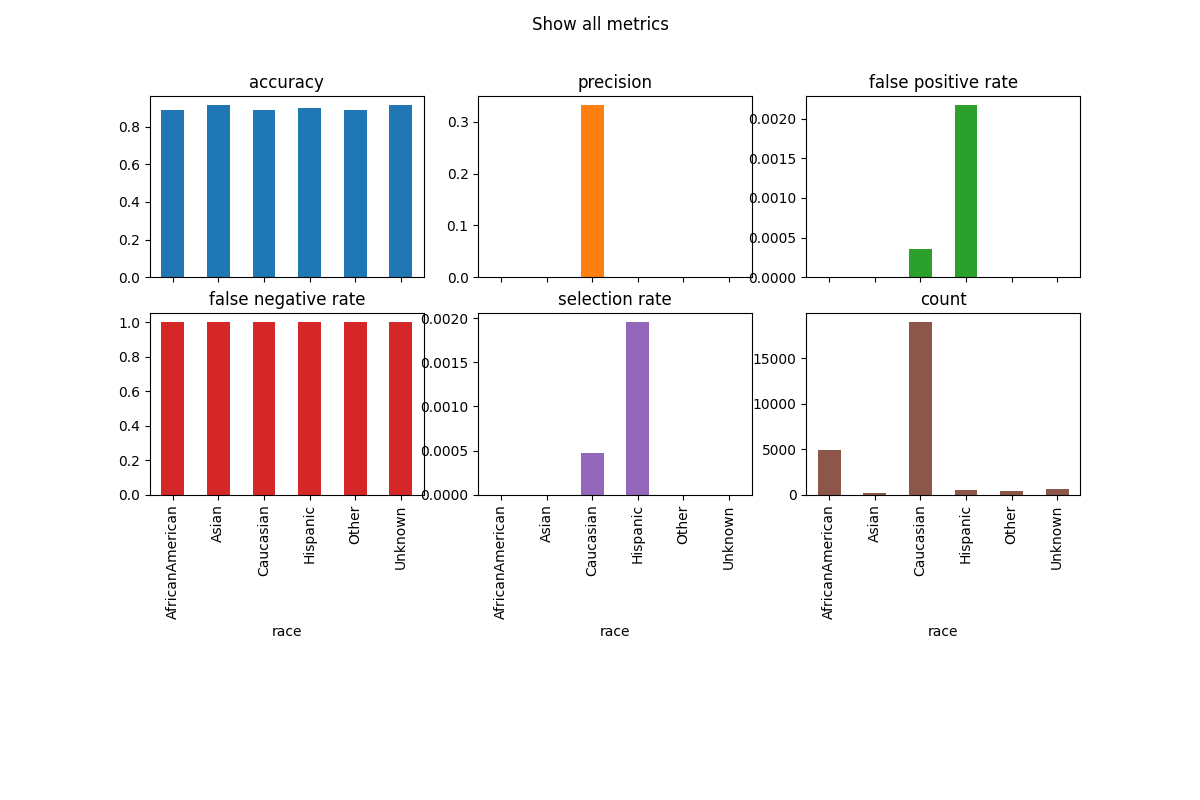

For this example, we use a clinical dataset of hospital re-admissions over a ten-year period (1998-2008) for diabetic patients across 130 different hospitals in the U.S. This scenario builds upon prior research on how racial disparities impact health care resource allocation in the U.S. For an in-depth analysis of this dataset, review the SciPy tutorial that the Fairlearn team presented in 2021.

We will use machine learning to predict whether an individual in the dataset is readmitted to the hospital within 30 days of hospital release. A hospital readmission within 30 days can be viewed as a proxy that the patients needed more assistance at the release time. In the next section, we build a classification model to accomplish the prediction task.

>>> import numpy as np

>>> import pandas as pd

>>> import matplotlib.pyplot as plt

>>> from fairlearn.datasets import fetch_diabetes_hospital

>>> data = fetch_diabetes_hospital(as_frame=True)

>>> X = data.data.copy()

>>> X.drop(columns=["readmitted", "readmit_binary"], inplace=True)

>>> y = data.target

>>> X_ohe = pd.get_dummies(X)

>>> race = X['race']

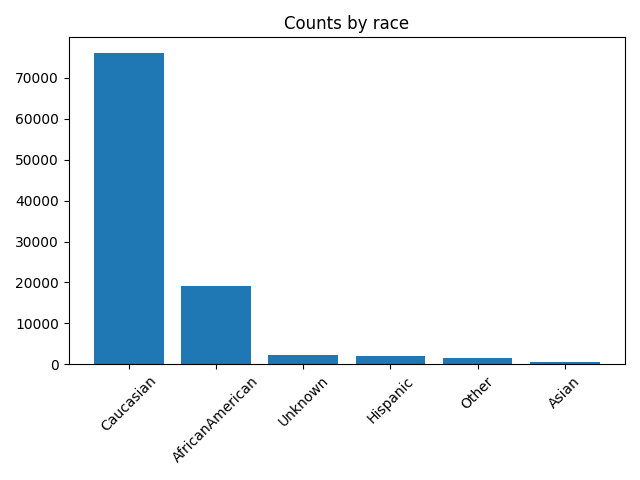

>>> race.value_counts()

race

Caucasian 76099

AfricanAmerican 19210

Unknown 2273

Hispanic 2037

Other 1506

Asian 641

Name: count, dtype: int64

Mitigating disparity#

If we observe disparities between groups we may want to create a new model while specifying an appropriate fairness constraint. Note that the choice of fairness constraints is crucial for the resulting model, and varies based on application context. Since both false positives and false negatives are relevant for fairness in this hypothetical example, we can attempt to mitigate the observed disparity using the fairness constraint called Equalized Odds, which bounds disparities in both types of error. In real world applications we need to be mindful of the sociotechnical context when making such decisions. The Exponentiated Gradient mitigation technique used fits the provided classifier using Equalized Odds as the constraint and a suitably weighted Error Rate as the objective, leading to a vastly reduced difference in accuracy:

>>> from fairlearn.reductions import ErrorRate, EqualizedOdds, ExponentiatedGradient

>>> objective = ErrorRate(costs={'fp': 0.1, 'fn': 0.9})

>>> constraint = EqualizedOdds(difference_bound=0.01)

>>> classifier = DecisionTreeClassifier(min_samples_leaf=10, max_depth=4)

>>> mitigator = ExponentiatedGradient(classifier, constraint, objective=objective)

>>> mitigator.fit(X_train, y_train, sensitive_features=A_train)

ExponentiatedGradient(...)

>>> y_pred_mitigated = mitigator.predict(X_test)

>>> mf_mitigated = MetricFrame(metrics=accuracy_score, y_true=y_test, y_pred=y_pred_mitigated, sensitive_features=A_test)

>>> mf_mitigated.overall.item()

0.5251...

>>> mf_mitigated.by_group

race

AfricanAmerican 0.524358

Asian 0.562874

Caucasian 0.525588

Hispanic 0.549902

Other 0.478873

Unknown 0.511864

Name: accuracy_score, dtype: float64

Note that ExponentiatedGradient does not have a predict_proba

method, but we can adjust the target decision threshold by specifying

(possibly unequal) costs for false positives and false negatives.

In our example we use the cost of 0.1 for false positives and 0.9 for false negatives.

Without fairness constraints, this would exactly correspond to

referring patients with the readmission risk of 10% or higher

(as we used earlier).

What’s next?#

Please refer to our User Guide for a comprehensive view on Fairness in Machine Learning and how Fairlearn fits in, as well as an exhaustive guide on all parts of the toolkit. For concrete examples check out the Example Notebooks section. Finally, we also have a collection of Frequently asked questions.